Note

This page was generated from notebooks/input_matrices_and_tensors.ipynb.

[ ]:

import logging

import numpy as np
import scipy.sparse as sp
import smurff

logging.basicConfig(level=logging.INFO)

Input to SMURFF

In this notebook we look at how to provide input to SMURFF as dense and sparse matrices and as sparse tensors.

SMURFF accepts the following Python objects for train, test and side-info data:

- for dense matrix or tensor input: numpy.ndarray

- for sparse matrix input: scipy.sparse matrices in COO, CSR or CSC format

- for sparse tensors: SparseTensor, a wrapper around a pandas.DataFrame
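The input types listed above can all be built with numpy, scipy and pandas alone. The following is a minimal sketch with arbitrary sizes and values. The DataFrame only illustrates the one-row-per-known-cell layout that SparseTensor wraps; it is not handed to SMURFF here.

```python
import numpy as np
import pandas as pd
import scipy.sparse as sp

# Dense matrix (a dense tensor would simply have more axes): a plain numpy.ndarray
Y_dense = np.random.rand(4, 3)

# Sparse matrix with 2 stored values at (0, 1) and (3, 2); COO, CSR and CSC convert into each other
Y_coo = sp.coo_matrix(([1.0, 2.0], ([0, 3], [1, 2])), shape=(4, 3))
Y_csr = Y_coo.tocsr()
Y_csc = Y_coo.tocsc()

# Sparse tensor data: one row per known cell,
# integer columns for the 0-based coordinates and a float column for the value
cells = pd.DataFrame({"i": [0, 1], "j": [2, 0], "k": [1, 1], "value": [0.5, -1.2]})

print(Y_csr.format, Y_csc.format, Y_csr.nnz)  # csr csc 2
```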

Let’s look at how this works in practice.

Dense Train Input

[ ]:

# dense input
Ydense = np.random.rand(10, 20)
trainSession = smurff.TrainSession(burnin=5, nsamples=5)
trainSession.addTrainAndTest(Ydense)
trainSession.run()

Sparse Matrix Input

The implicit zero elements of a sparse matrix (the cells that are not stored) can represent either

- missing values, also called ‘unknown’ or ‘not-available’ (NA) values, or

- actual zero values, left out of storage to save space.

In SMURFF, is_scarce=True selects the first interpretation (unstored cells are unknown) and is_scarce=False the second (the matrix is fully known, with unstored cells equal to zero).

Important:

- when calling addTrainAndTest(Ytrain, Ytest, is_scarce), is_scarce refers to the Ytrain matrix only; Ytest is always scarce.

- when calling addSideInfo(mode, sideinfoMatrix) with a sparse sideinfoMatrix, this matrix is always treated as fully known.
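The choice between the two interpretations changes how many observations the model sees. The following worked count uses scipy only and does not call SMURFF. It shows what each setting of is_scarce would mean for a 15 x 10 matrix like the ones in the examples, assuming the semantics described above.

```python
import scipy.sparse as sp

# Same kind of input as in the examples: 15 x 10 with density 0.2
Y = sp.rand(15, 10, 0.2)           # COO matrix with round(0.2 * 150) = 30 stored values

n_cells = Y.shape[0] * Y.shape[1]  # 150 cells in total
n_stored = Y.nnz                   # 30 stored cells

# Scarce view (is_scarce=True): unstored cells are missing, only stored cells are observations
observed_if_scarce = n_stored
# Fully known view (is_scarce=False): unstored cells are zeros, so every cell is an observation
observed_if_fully_known = n_cells

# The two views disagree even on a simple statistic such as the mean of the observed data
mean_if_scarce = Y.data.mean()
mean_if_fully_known = Y.sum() / n_cells

print(observed_if_scarce, observed_if_fully_known)  # 30 150
```

In the fully known view, 120 of the 150 observations are zeros. This pulls the mean of the observed data down to one fifth of the scarce-view mean.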

[ ]:

# sparse matrix input with 20% non-zeros; the other 80% of the cells are known zeros (fully known)
Ysparse = sp.rand(15, 10, 0.2)
trainSession = smurff.TrainSession(burnin=5, nsamples=5)
trainSession.addTrainAndTest(Ysparse, is_scarce=False)
trainSession.run()

[ ]:

# sparse matrix input with unknowns (the default)
Yscarce = sp.rand(15, 10, 0.2)
trainSession = smurff.TrainSession(burnin=5, nsamples=5)
trainSession.addTrainAndTest(Yscarce, is_scarce=True)
trainSession.run()
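The calls above pass only a training matrix. A separate test matrix can be built by moving a random subset of the stored cells of Y into a second sparse matrix with the same shape. The following is a minimal scipy-only sketch. split_train_test is a hypothetical helper, not part of SMURFF. SMURFF's own make_train_test, used in the tensor example, may choose and count test cells differently.

```python
import numpy as np
import scipy.sparse as sp

def split_train_test(Y, test_fraction, seed=0):
    """Hypothetical helper: move a random fraction of the stored cells of Y into a test matrix.

    Both returned matrices keep the shape of Y, and every stored cell ends up in exactly one of them.
    """
    Y = sp.coo_matrix(Y)
    rng = np.random.default_rng(seed)
    n_test = int(round(test_fraction * Y.nnz))
    # mark n_test distinct stored cells as test cells
    is_test = np.zeros(Y.nnz, dtype=bool)
    is_test[rng.permutation(Y.nnz)[:n_test]] = True

    def subset(mask):
        return sp.coo_matrix((Y.data[mask], (Y.row[mask], Y.col[mask])), shape=Y.shape)

    return subset(~is_test), subset(is_test)

Y = sp.rand(15, 10, 0.2)                 # 30 stored cells
Ytrain, Ytest = split_train_test(Y, 0.2)
print(Ytrain.nnz, Ytest.nnz)             # 24 6
```

The pair could then be passed as addTrainAndTest(Ytrain, Ytest, is_scarce=...). There, is_scarce only affects Ytrain, since Ytest is always scarce.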

Tensor input

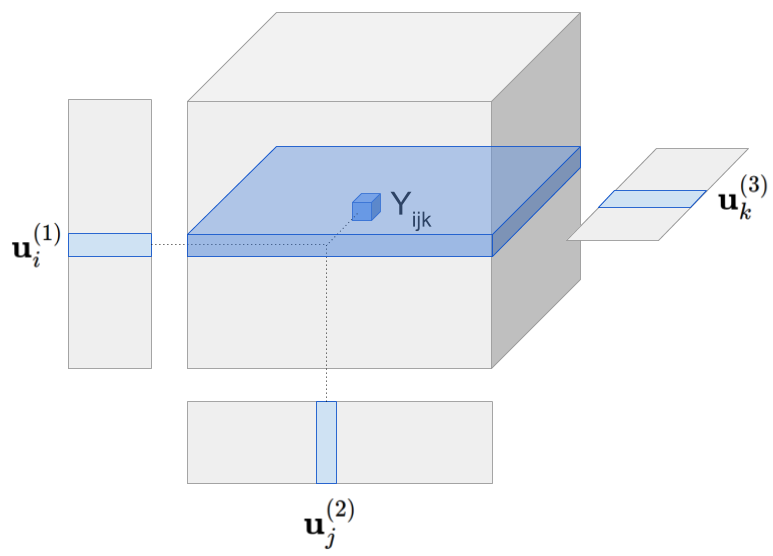

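SMURFF also supports tensor factorization, with or without side information on any of the modes. A tensor can be thought of as a generalization of a matrix to relations between more than two items. For example, a 3-tensor of drug × cell × gene could express the effect of a drug on a given cell and gene. In this case, the prediction for the element Yhat[i,j,k] is given by

$$\hat{Y}_{ijk} = \sum_{d=1}^{D} u^{(1)}_{d,i}\, u^{(2)}_{d,j}\, u^{(3)}_{d,k} + \mu$$

where D is the number of latent dimensions, u^{(m)}_{d,i} is the d-th latent value of item i in mode m, and μ is the global mean.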

Visually, the model can be represented as follows:

Figure: the tensor model predicts Yhat[i,j,k] by multiplying all latent vectors together element-wise and then summing along the latent dimension (the global mean is omitted from the figure).
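The prediction rule described above can be evaluated for every (i, j, k) at once with numpy.einsum. The rule multiplies the latent vectors element-wise, sums over the latent dimension, and adds the global mean. The following is a minimal sketch. predict_tensor is a hypothetical name. The sketch assumes each mode's latent vectors are the rows of an (items x D) matrix, as in the toy data generated further below, which is the transpose of the u_{d,i} indexing.

```python
import numpy as np

def predict_tensor(U1, U2, U3, mu=0.0):
    """Hypothetical helper: Yhat[i, j, k] = sum_d U1[i, d] * U2[j, d] * U3[k, d] + mu.

    U1, U2, U3 have shapes (n1, D), (n2, D), (n3, D): one latent vector (row) per item.
    """
    return np.einsum("id,jd,kd->ijk", U1, U2, U3) + mu

# Hand-checkable case with D = 2: 1*3*5 + 2*4*6 = 15 + 48 = 63, plus mu = 0.5
U1 = np.array([[1.0, 2.0]])
U2 = np.array([[3.0, 4.0]])
U3 = np.array([[5.0, 6.0]])
print(predict_tensor(U1, U2, U3, mu=0.5))  # [[[63.5]]]
```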

For tensors, SMURFF implements a SparseTensor class. SparseTensor is a wrapper around a pandas DataFrame in which each row stores the coordinates and the value of one known cell of the tensor. Specifically, the integer columns of the DataFrame give the coordinates of the cell, and the float (or double) column stores its value; the order of the columns does not matter. The coordinates are 0-based. The shape of the SparseTensor can be provided explicitly; otherwise it is inferred from the maximum index in each mode (maximum index + 1, since the coordinates are 0-based).
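The column convention described above can be mirrored in a few lines of pandas. The following is a minimal sketch. split_coords_and_values is a hypothetical helper that illustrates the described rules. It is not SMURFF's SparseTensor implementation. Here the value column is deliberately placed first to show that column order does not matter.

```python
import pandas as pd

def split_coords_and_values(df, shape=None):
    """Hypothetical helper: integer columns hold 0-based coordinates, the single float column holds the values."""
    int_cols = [c for c in df.columns if pd.api.types.is_integer_dtype(df[c])]
    float_cols = [c for c in df.columns if pd.api.types.is_float_dtype(df[c])]
    if len(float_cols) != 1:
        raise ValueError("expected exactly one float value column")
    coords = df[int_cols].to_numpy()
    values = df[float_cols[0]].to_numpy()
    if shape is None:
        # 0-based coordinates: the size of each mode is its maximum index + 1
        shape = tuple(int(m) + 1 for m in coords.max(axis=0))
    return coords, values, shape

df = pd.DataFrame({"value": [0.5, -1.2, 2.0], "drug": [0, 3, 1], "cell": [1, 0, 4], "gene": [2, 2, 0]})
coords, values, shape = split_coords_and_values(df)
print(shape)  # (4, 5, 3)
```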

Here is a simple toy example that factorizes a 3-tensor (no side information is used here).

[ ]:

import itertools

import numpy as np
import pandas as pd
import smurff

## generating toy data: a tensor of shape 15 x 3 x 2 built from 2 latent dimensions
A = np.random.randn(15, 2)
B = np.random.randn(3, 2)
C = np.random.randn(2, 2)

# all (i, j, k) coordinates of the tensor
idx = list(itertools.product(np.arange(A.shape[0]),
                             np.arange(B.shape[0]),
                             np.arange(C.shape[0])))

# integer columns "A", "B", "C" hold the coordinates; the float column "value" holds the cell value
df = pd.DataFrame(np.asarray(idx), columns=["A", "B", "C"])
df["value"] = np.array([np.sum(A[i[0], :] * B[i[1], :] * C[i[2], :]) for i in idx])

## assigning 20% of the cells to the test set
Ytrain, Ytest = smurff.make_train_test(df, 0.2)
print("Ytrain = ", Ytrain)

## for an artificial dataset, small values for burnin, nsamples and num_latent are fine
predictions = smurff.BPMFSession(
    Ytrain=Ytrain,
    Ytest=Ytest,
    num_latent=4,
    burnin=20,
    nsamples=20).run()

print("First prediction of Ytest tensor: ", predictions[0])
